Comparing the new Redis6 multithreaded I/O to Elasticache & KeyDB

People often ask which is faster: Elasticache, Redis, or KeyDB. With Redis 6 and its multithreaded I/O on the horizon, we felt it was a good time for a full comparison! This blog compares the single-node performance of Elasticache, open source KeyDB, and open source Redis v5.9.103 (6.0 branch). We look at throughput and latency under different loads and with different tools.

A Brief History

KeyDB was introduced nearly a year ago as a multithreaded fork of Redis with major performance gains. Soon after, Amazon announced enhanced I/O handling for Redis on Elasticache. Redis is now in the process of releasing its multithreaded I/O option in version 6.0. Unlike Redis6 and Elasticache, KeyDB multithreads several parts of the server: the event loop itself runs on multiple threads, and network I/O and query parsing are done concurrently.

So there are three offerings from three companies, all compatible with each other and based on open source Redis: Elasticache, offered as an optimized Redis service; Redis, with RedisLabs providing both the core product and a monetized offering; and KeyDB, which remains a fast, cutting-edge, open source superset of Redis. This blog looks specifically at performance; however, a section at the end also outlines other key differences.

Testing

For testing we use Memtier, a benchmarking tool created by RedisLabs, and YCSB, Yahoo's benchmarking tool for comparing many popular databases. We did not use KeyDB's built-in benchmark, partly to avoid the appearance of bias and partly because of its throughput limitations for this test. Anyone can reproduce the results shown here using AWS. A section at the end of this blog details how to reproduce the tests and which factors to consider to avoid bottlenecks, test variance, and skew.

Throughput Testing with Memtier

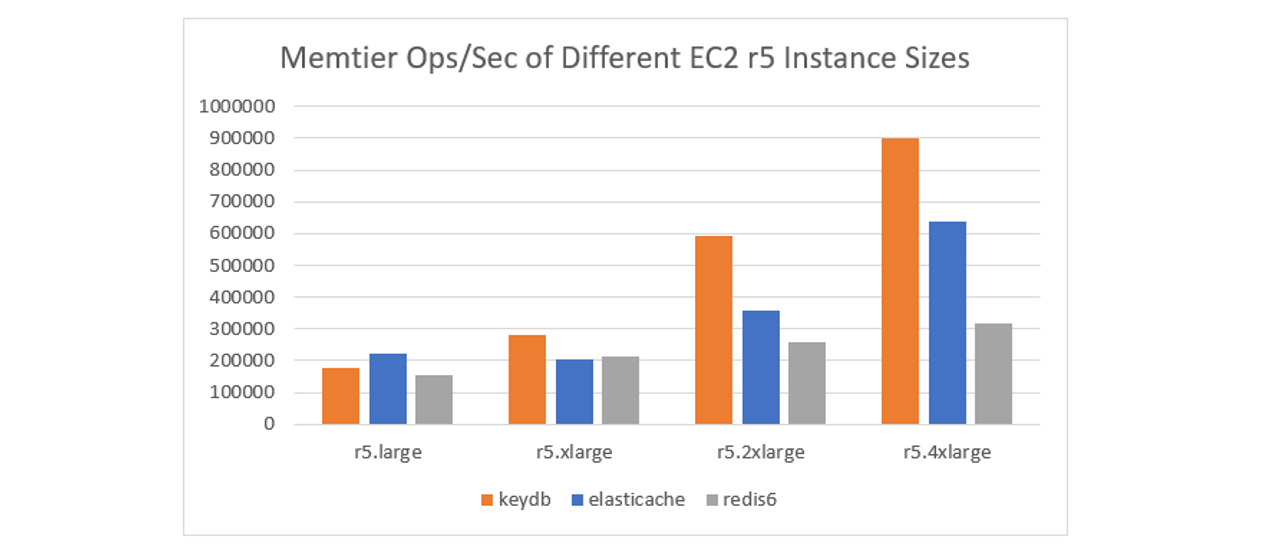

Memtier is a benchmark tool designed by RedisLabs for load testing Redis. It is good at producing high throughput, and it is also compatible with KeyDB and Elasticache. The tests below were run on several sizes of AWS r5 instance.

We ran up to 8 server-threads with KeyDB and 8 io-threads with Redis. Redis often showed no further improvement above 4 io-threads; however, we tried different combinations and used the thread count that gave the best throughput.

Elasticache is a service managed by AWS so we leave it up to them to provide the optimized configuration.

All tests were performed in the same availability zone over private IPs, and results were averaged over several runs. See the end of this blog for details on reproducing these benchmarks.
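Picking a thread count this way boils down to averaging the repeated runs for each setting and keeping the best one. A minimal Python sketch, assuming you have already collected an ops/sec figure per run; the function name and data layout are hypothetical and not part of Memtier:

```python
from __future__ import annotations

from statistics import mean


def best_thread_count(runs: dict[int, list[float]]) -> tuple[int, float]:
    """Return (thread_count, mean_ops_per_sec) for the setting with the best average.

    `runs` maps a thread count (server-threads for KeyDB, io-threads for Redis)
    to the ops/sec measured in each repeated run at that setting.
    """
    # Average each setting's repeated runs, ignoring settings with no samples.
    averages = {threads: mean(samples) for threads, samples in runs.items() if samples}
    if not averages:
        raise ValueError("no measurements supplied")
    best = max(averages, key=averages.get)
    return best, averages[best]
```

Averaging before comparing keeps a single lucky or unlucky run from deciding the thread count.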

The chart below shows the throughput in ops/sec using a loaded database:

As you can see, KeyDB shows significant throughput gains over Redis and Elasticache as more cores become available. Even with multithreaded I/O, Redis6 still lags behind KeyDB and Elasticache, with less ability to scale vertically.

On the r5.large instance, Elasticache had slightly higher performance, but we noticed a drop in Elasticache's performance on the r5.xlarge (across several tests at different times). We are not sure of the reason for this.

Latency numbers from memtier end up being several milliseconds because it measures latency at the maximum throughput of the machine/node. This is not representative of average or even high loads, but rather of a worst-case scenario. For a good relative comparison of latency, we look to YCSB to measure latency at given loads and throughput.
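One way to see why latency is so high at saturation is Little's law: when a fixed number of requests is kept in flight, average latency equals outstanding requests divided by throughput. A small Python sketch, assuming a closed-loop benchmark without pipelining (one outstanding request per connection); the connection count in the note below is hypothetical, not the memtier configuration used here:

```python
def littles_law_latency_ms(outstanding_requests: int, throughput_ops_per_sec: float) -> float:
    """Average latency implied by Little's law, W = L / lambda, in milliseconds.

    Assumes a closed loop where each connection keeps exactly one request in flight.
    """
    if throughput_ops_per_sec <= 0:
        raise ValueError("throughput must be positive")
    return outstanding_requests / throughput_ops_per_sec * 1000.0
```

For instance, 32 threads with a hypothetical 50 connections each keep 1,600 requests in flight; at 800,000 ops/sec that works out to about 2 ms of average latency, even if the server could answer an isolated request far faster.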

Throughput Testing using YCSB

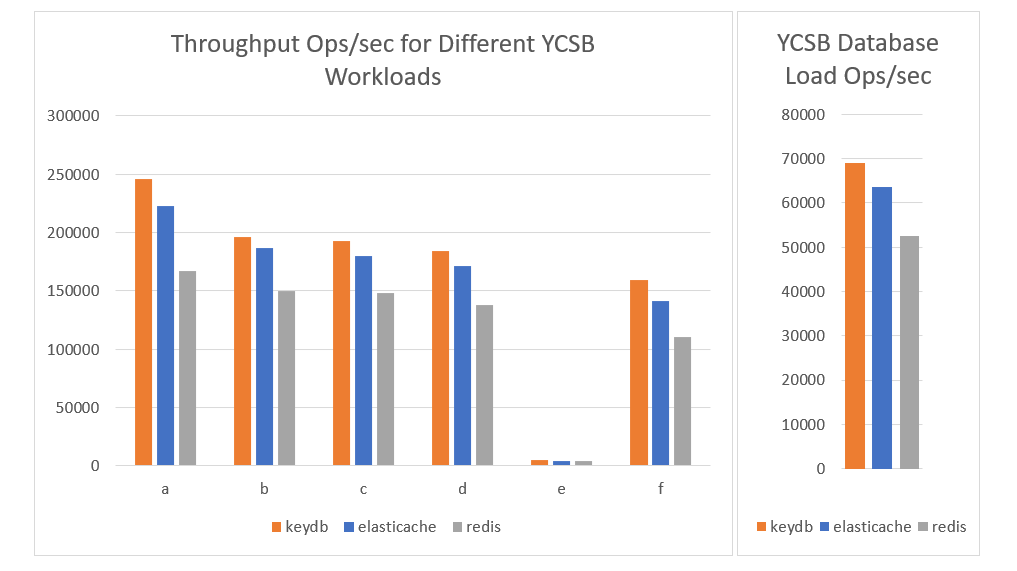

YCSB is a benchmarking tool from Yahoo that is compatible with many of the popular databases available today. It offers a lot of flexibility, with several 'workloads' that represent more realistic loading scenarios.

The testing below looks at the max throughput while running several different workloads:

- Workload A: Update heavy workload (50:50 read/write)

- Workload B: Read mostly workload (95:5 read/write)

- Workload C: Read only

- Workload D: Read latest workload (the most recently inserted records are the most popular)

- Workload E: Short ranges (i.e. threaded conversations)

- Workload F: Read-modify-write

Full descriptions of the workloads above are available in the YCSB documentation.
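For reference, the mixes listed above correspond to the operation proportions set in YCSB's core workload property files (`readproportion`, `updateproportion`, `insertproportion`, `scanproportion`, `readmodifywriteproportion`). The Python sketch below encodes those mixes and samples an operation sequence from one of them. It ignores the request distributions (zipfian, latest, etc.) that YCSB also applies, so treat it as an illustration, not a YCSB replacement; the function name is hypothetical:

```python
import random
from collections import Counter

# Operation proportions of YCSB's core workloads a-f.
CORE_WORKLOAD_MIX = {
    "a": {"read": 0.50, "update": 0.50},
    "b": {"read": 0.95, "update": 0.05},
    "c": {"read": 1.00},
    "d": {"read": 0.95, "insert": 0.05},
    "e": {"scan": 0.95, "insert": 0.05},
    "f": {"read": 0.50, "readmodifywrite": 0.50},
}


def sample_operations(workload: str, n: int, seed: int = 0) -> Counter:
    """Draw n operations according to the workload's mix (which keys are hit is not modeled)."""
    mix = CORE_WORKLOAD_MIX[workload.lower()]
    rng = random.Random(seed)
    ops = rng.choices(list(mix), weights=list(mix.values()), k=n)
    return Counter(ops)
```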

The chart below shows the maximum operations per second for several workloads on a preloaded dataset. The 'Load Ops/sec' figure comes from loading the workload 'a' dataset, which is used for all workload tests per YCSB's recommendation.

With YCSB it is evident that KeyDB achieves the highest throughput under every workload tested. Elasticache comes in second, followed by Redis6. Note that Redis6 is still faster than Redis 5, which has no io-threads.

Latency Testing with YCSB

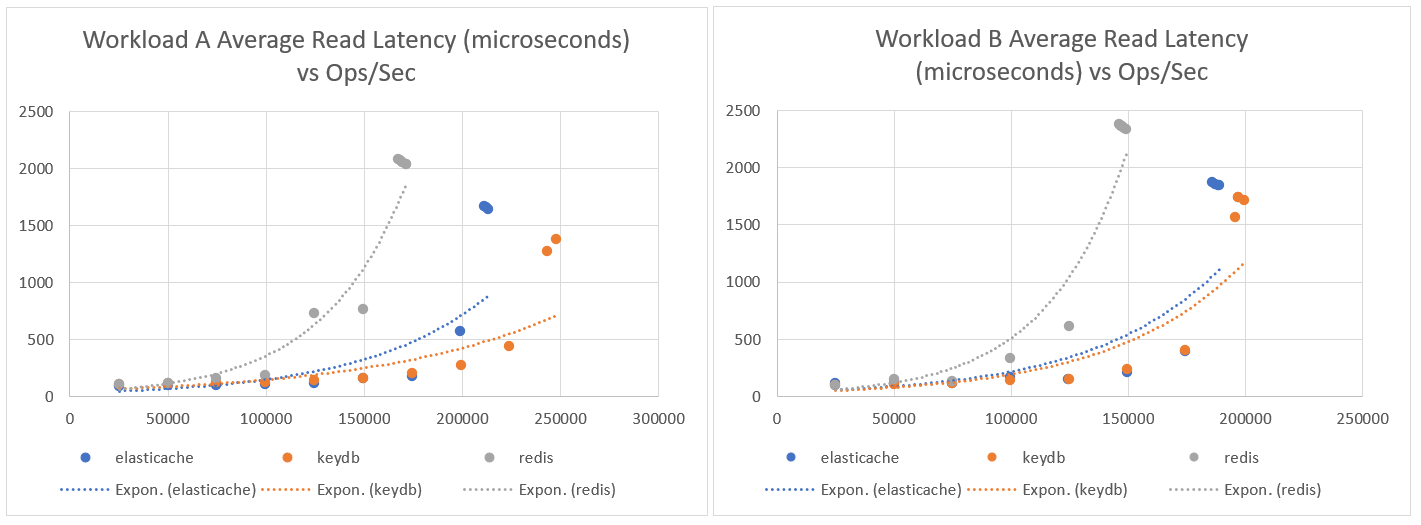

YCSB is a great tool for measuring latency. It lets you specify a target throughput and measures the latency at that traffic volume. Running it at different loads gives good visibility into how your database might perform under different workloads and traffic levels. It can also help a lot when selecting a machine size for your instance.
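The idea behind a target throughput (YCSB's `-target` option) is to pace requests at a fixed rate and record latency at that rate. A single-threaded Python sketch of that pacing, where `op` is a hypothetical stand-in for a call to your database client; this is not YCSB's implementation:

```python
import time
from typing import Callable, List


def run_at_target(op: Callable[[], None], target_ops_per_sec: float, total_ops: int) -> List[float]:
    """Issue `op` at a fixed target rate and return per-operation latencies in microseconds."""
    interval = 1.0 / target_ops_per_sec
    start = time.perf_counter()
    latencies_us = []
    for i in range(total_ops):
        # Sleep until this operation's scheduled start time.
        delay = start + i * interval - time.perf_counter()
        if delay > 0:
            time.sleep(delay)
        t0 = time.perf_counter()
        op()
        latencies_us.append((time.perf_counter() - t0) * 1e6)
    return latencies_us


def percentile(values: List[float], pct: float) -> float:
    """Simple index-based percentile of the sorted values."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    index = min(len(ordered) - 1, int(round(pct / 100 * (len(ordered) - 1))))
    return ordered[index]
```

This simple version times each operation from its actual start, so time spent waiting behind a slow previous operation is not counted. Sweeping `target_ops_per_sec` upward and recording a percentile at each step gives a latency-versus-load curve.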

The test below looks at 'workload a', which is 50/50 read/write, and 'workload b', which is mostly reads (95/5 read/write). UPDATE latency trends look similar to the READ latency trends shown below. These workloads are common when running a database as a cache layer.

Keep in mind that latency is measured in microseconds; lower values are better.

The trend displayed above shows that latency rises significantly as an instance approaches its throughput capacity. KeyDB can handle significantly more throughput than Redis6 and slightly more than Elasticache, so it maintains lower latencies at higher loads.

KeyDB, Elasticache and Redis 6 all have similar latencies under light to moderate traffic. It's not until load approaches capacity that the difference appears. The trend above looks very similar when run on workloads a/c/d/e/f, with slightly different magnitudes. The behavior here is the important takeaway.
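The shape of these curves is what basic queueing theory predicts. As a simplified illustration, take a textbook M/M/1 queue (one server, Poisson arrivals, exponential service times, which none of these databases strictly follows): mean latency is 1 / (mu - lambda), where mu is capacity and lambda is offered load, so latency stays flat at low utilization and explodes near capacity. A server with more capacity runs at lower utilization for the same traffic, which is why it keeps latency lower at higher loads. A Python sketch with a hypothetical capacity:

```python
def mm1_mean_latency_us(capacity_ops: float, load_ops: float) -> float:
    """Mean time in system for an M/M/1 queue, W = 1 / (mu - lambda), in microseconds."""
    if load_ops >= capacity_ops:
        raise ValueError("load must stay below capacity for the queue to be stable")
    return 1e6 / (capacity_ops - load_ops)


if __name__ == "__main__":
    capacity = 100_000  # hypothetical capacity in ops/sec, for illustration only
    for utilization in (0.25, 0.5, 0.75, 0.9, 0.99):
        latency = mm1_mean_latency_us(capacity, utilization * capacity)
        print(f"{utilization:.0%} load -> {latency:.0f} us")
```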

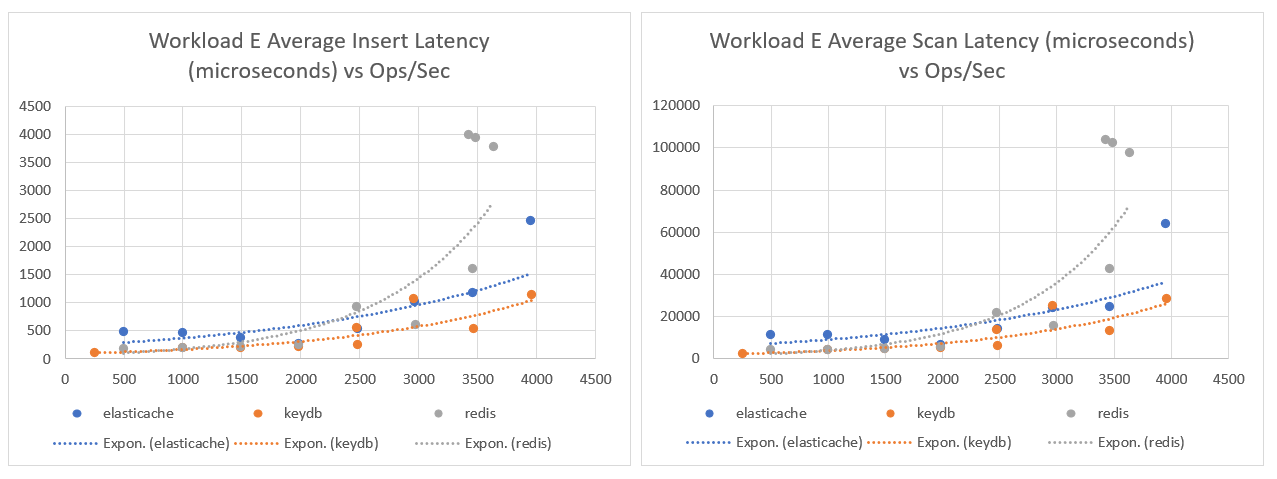

'Workload e' differs the most in its response, so we also show its latency trend below. Workload e, as described by YCSB, is typical of “threaded conversations, where each scan is for the posts in a given thread”.

As you can see, the trend behaves similarly, although the nature of this workload means its dataset differs from the others. KeyDB still maintains lower latency.

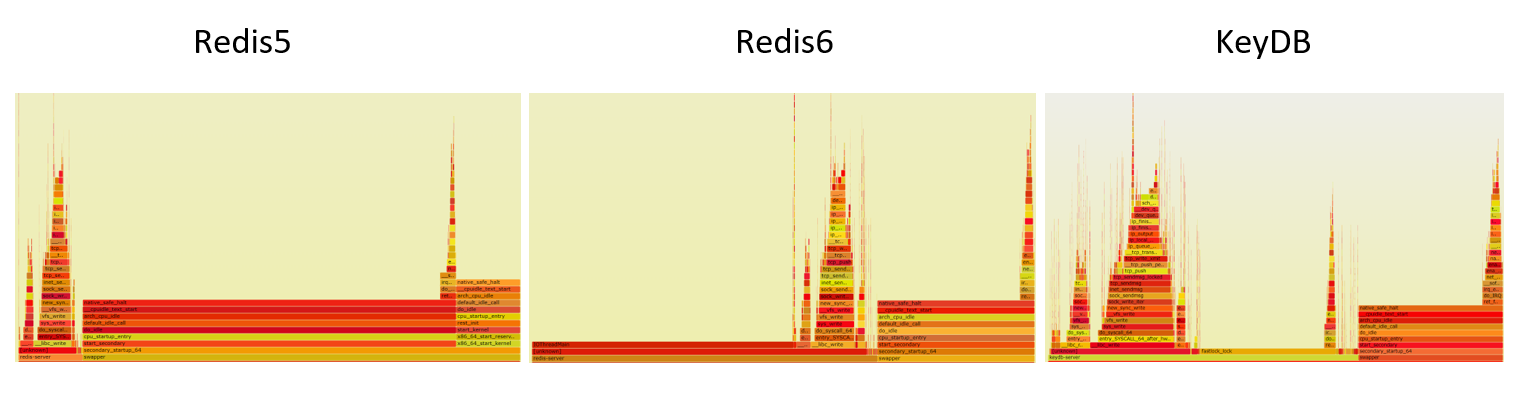

FlameGraphs

For those of you who like flame graphs and want a visual representation, we generated FlameGraphs for Redis5, Redis6 and KeyDB. We do not have access to the Elasticache server, so we could not generate them for it. These FlameGraphs were captured during the benchmark tests. Links to the SVG files are below if you want a closer look at the breakdown.

memtier-redis5, memtier-redis6, memtier-keydb, ycsb-redis5, ycsb-redis6, ycsb-keydb

How are KeyDB, Elasticache, and Redis Different?

Each offering started from open source Redis and maintains full compatibility with it. However, each has gone in a slightly different direction.

So other than performance, what are some other key differences between the offerings?

KeyDB:

- KeyDB is open source. We are focused on memory efficiency, high throughput, and performance, with some major improvements queued up for the near future. As a fork, KeyDB can provide features that would otherwise never become part of open source Redis. We focus on simplifying the user experience, offering features such as active replication and multi-master options. We also have additional commands such as subkey EXPIRES and CRON.

- The KeyDB Pro offering includes MVCC and FLASH support, among other features

Elasticache:

- Elasticache offers its own fork of Redis as an optimized service. This is a paid service available only through AWS, so you cannot run it on prem. Because it is fully hosted, instance management, replicas, and clustering are handled by AWS, with the user making only limited high-level decisions. Configuration parameters are also out of your control.

Redis:

- Redis is open source and has been around the longest. It takes a minimalist approach to the code base, which differs from KeyDB's approach.

- RedisLabs offers the paid version of Redis, with a fully hosted solution as well as proprietary modules. RedisLabs offers FLASH with its paid version, and it can be hosted on prem. However, pricing can be quite high.

Multithreading Differences

While Redis6 now has multithreaded I/O and Elasticache uses its enhanced network I/O threading, KeyDB works by running the normal Redis event loop on multiple threads. Network I/O and query parsing are done concurrently. Each connection is assigned a thread on accept(). Access to the core hash table is guarded by a spinlock. Because hash table access is extremely fast, this lock has low contention. Transactions hold the lock for the duration of the EXEC command. Modules work in concert with the GIL, which is only acquired when all server threads are paused. This maintains the atomicity guarantees modules expect.
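To make this model more concrete, here is a toy Python sketch of the same structure: several event-loop threads, each connection pinned to one thread when it is accepted, a spinlock around a shared dict, and EXEC holding the lock for the whole transaction. Everything here (the class names, the round-robin assignment, modeling a connection as a list of commands) is a hypothetical simplification rather than KeyDB's code. Module locking is not modeled, and CPython's own interpreter lock means these threads will not actually run in parallel; the sketch shows the structure only:

```python
from __future__ import annotations

import itertools
import threading
from queue import Queue


class SpinLock:
    """Busy-waiting lock: cheap when the critical section is very short."""

    def __init__(self) -> None:
        self._flag = threading.Lock()

    def __enter__(self) -> "SpinLock":
        while not self._flag.acquire(blocking=False):
            pass  # spin instead of sleeping until the holder releases
        return self

    def __exit__(self, *exc) -> None:
        self._flag.release()


class ToyServer:
    """Hypothetical model: N event-loop threads sharing one spinlocked dict."""

    def __init__(self, n_threads: int) -> None:
        self.db: dict[str, str] = {}
        self.db_lock = SpinLock()
        self.queues: list[Queue] = [Queue() for _ in range(n_threads)]
        self._next_thread = itertools.cycle(range(n_threads))
        for q in self.queues:
            threading.Thread(target=self._event_loop, args=(q,), daemon=True).start()

    def accept(self, commands: list[tuple]) -> threading.Event:
        """Pin a new 'connection' to one thread (round-robin here) and queue its commands."""
        done = threading.Event()
        self.queues[next(self._next_thread)].put((commands, done))
        return done

    def _event_loop(self, q: Queue) -> None:
        while True:
            commands, done = q.get()
            try:
                for cmd in commands:
                    # In a real server, parsing would happen here, outside the lock.
                    if cmd[0] == "EXEC":
                        # A transaction holds the lock for all of its queued commands.
                        with self.db_lock:
                            for queued in cmd[1]:
                                self._apply(queued)
                    else:
                        # A single command holds the lock only for the table access.
                        with self.db_lock:
                            self._apply(cmd)
            finally:
                done.set()

    def _apply(self, cmd: tuple) -> None:
        op, key, *args = cmd
        if op == "SET":
            self.db[key] = args[0]
        elif op == "INCR":
            self.db[key] = str(int(self.db.get(key, "0")) + 1)
        else:
            raise ValueError(f"unsupported command {op!r}")
```

For example, eight connections that each send 100 INCR commands to the same key, spread over four threads, end with the counter at 800, because every increment runs under the spinlock.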

Reproducing Benchmarks

We cannot run CONFIG GET through the client to see Elasticache's configuration. However, looking at the setup, Elasticache relies on replicas for data backup and offers options such as 'daily backups' of RDB snapshots to S3. Looking at INFO persistence, you can see that background saving does not occur during benchmarks, whereas it is enabled by default in keydb.conf and redis.conf. Our typical benchmarks keep the defaults, including background saves; however, for fairness in this testing we disabled background saving by passing the --save "" option to Redis and KeyDB. In most cases this makes no noticeable difference.
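Checking for background saves can be scripted. The INFO persistence output (for example from `redis-cli INFO persistence`) is plain `field:value` lines grouped under `# Section` headers, and `rdb_bgsave_in_progress` and `aof_rewrite_in_progress` report whether a save or rewrite is running. A minimal Python parser, assuming you have captured that text yourself; the helper names are hypothetical:

```python
from __future__ import annotations


def parse_info(text: str) -> dict[str, str]:
    """Parse INFO output into a flat {field: value} dict, skipping '# Section' headers."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value
    return fields


def background_save_running(info: dict[str, str]) -> bool:
    """True if an RDB background save or AOF rewrite is currently in progress."""
    return info.get("rdb_bgsave_in_progress") == "1" or info.get("aof_rewrite_in_progress") == "1"
```

Polling this during a run and seeing `rdb_changes_since_last_save` keep growing while `background_save_running` stays false indicates that no snapshot is being taken.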

To help optimize Redis, and following its recommendations, we also updated the following Linux parameters:

- Changed /proc/sys/net/core/somaxconn to 65536

- echo never > /sys/kernel/mm/transparent_hugepage/enabled

- sysctl vm.overcommit_memory=1
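A small Python sketch that verifies the three settings above by reading the corresponding procfs/sysfs files. `sysctl vm.overcommit_memory` maps to `/proc/sys/vm/overcommit_memory`, and the transparent hugepage file marks the active choice in brackets (e.g. `always madvise [never]`). It only reads values and never changes them; the `read` parameter is a hypothetical hook so the check can be exercised without a real Linux host:

```python
from pathlib import Path

# Values recommended above, keyed by their procfs/sysfs paths.
EXPECTED = {
    "/proc/sys/net/core/somaxconn": "65536",
    "/proc/sys/vm/overcommit_memory": "1",
    "/sys/kernel/mm/transparent_hugepage/enabled": "never",
}


def selected_value(raw: str) -> str:
    """Return the active option; sysfs multi-choice files bracket it, e.g. 'always madvise [never]'."""
    raw = raw.strip()
    if "[" in raw and "]" in raw:
        return raw[raw.index("[") + 1 : raw.index("]")]
    return raw


def check_tuning(read=lambda path: Path(path).read_text()) -> dict:
    """Return {path: (actual, expected)} for every setting that differs from EXPECTED."""
    mismatches = {}
    for path, expected in EXPECTED.items():
        try:
            actual = selected_value(read(path))
        except OSError:
            actual = "<unreadable>"
        if actual != expected:
            mismatches[path] = (actual, expected)
    return mismatches


if __name__ == "__main__":
    for path, (actual, expected) in check_tuning().items():
        print(f"{path}: {actual} (expected {expected})")
```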

Avoid bottlenecks

- Because of KeyDB's multithreading and performance gains, we typically need a much larger benchmark machine than the one KeyDB is running on. We have found that a 32-core m5.8xlarge is needed to produce enough throughput with memtier. This supports up to a 16-core KeyDB instance (medium to 4xlarge).

- When using Memtier, run 32 threads.

- Run tests over the same network. If comparing instances, make sure they are in the same availability zone (AZ), e.g. both instances in us-east-2a.

- Run with private IP addresses. If you use AWS public IPs, there can be more network-related variance.

- Beware of running through a proxy or across VPCs. With proxies, firewalls, and other additional layers, it can be difficult to know for sure where the bottleneck is. It is best to benchmark in a simple environment (within the same VPC) and add the layers afterwards to make sure you are optimized.

- When comparing different machine instances, ensure they are in the same AZ and tested as close together in time as possible. Network throughput changes throughout the day, so running tests close to each other gives the most representative relative comparison.

- KeyDB is multithreaded. Ensure you specify multiple threads when running it.
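Some of these points can be turned into a pre-flight check before each run. A Python sketch, assuming you already know the server's IP, both availability zones, and the configured KeyDB thread count from your own inventory (how you obtain them is outside this sketch, and the function is hypothetical):

```python
from __future__ import annotations

import ipaddress


def preflight(server_ip: str, server_az: str, client_az: str, keydb_threads: int) -> list[str]:
    """Return a list of warnings; an empty list means the setup looks sane."""
    problems = []
    if not ipaddress.ip_address(server_ip).is_private:
        problems.append(f"{server_ip} is not a private address; public IPs add network variance")
    if server_az != client_az:
        problems.append(f"server is in {server_az} but the benchmark client is in {client_az}")
    if keydb_threads < 2:
        problems.append("KeyDB is configured with a single server thread")
    return problems
```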

KeyDB

All our tests are performed with the following parameter passed:

Keep in mind that our security group does not expose port 6379 to the public, only to our testing instance. You can add a password with the --requirepass mypassword parameter.

Redis

With Redis6 you will need to use the new io-threads parameter. You can set it as high as you want; we found the best results between 4 and 8. However, keep in mind the number of CPUs available on your machine when setting it.
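A Python sketch of assembling server command lines in this style: `--io-threads` for Redis6, `--server-threads` for KeyDB, plus `--save ""` to disable background saves as described earlier. Redis and KeyDB accept config directives as `--name value` arguments. Capping the thread count at the CPU count is our own assumption based on the advice above, Redis 6's `io-threads-do-reads` option is left at its default, and the function name is hypothetical:

```python
from __future__ import annotations

import os
import shlex


def server_command(binary: str, threads: int, cpus: int | None = None) -> list[str]:
    """Build an argv list for redis-server or keydb-server with background saves disabled."""
    cpus = cpus or os.cpu_count() or 1
    threads = max(1, min(threads, cpus))  # assumption: never exceed the available CPUs
    flag = "--server-threads" if binary == "keydb-server" else "--io-threads"
    return [binary, flag, str(threads), "--save", ""]


if __name__ == "__main__":
    print(shlex.join(server_command("redis-server", 4)))
```

Passing the list to `subprocess.Popen` delivers the empty string as a real empty argument, which is what `--save ""` does in a shell.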

Elasticache

You can start here: https://aws.amazon.com/elasticache/. Go to “create cluster” and keep in mind the following parameters:

- Select ‘Redis’ and ensure ‘cluster mode enabled’ is deselected

- Set your instance name and select version 5.0.6 (latest) compatibility

- Select your machine size/type and set replicas to 0

- Set your AZ to the same one as the benchmark machine

- Once you have created the machine, use its 'primary endpoint' as the host address to connect to
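The same single-node, zero-replica setup can also be requested programmatically. The Python sketch below only builds the keyword arguments for boto3's ElastiCache `create_replication_group` call (cluster mode disabled, one node, pinned to one AZ); the group name, description, and node type are placeholders, and you should check the current AWS documentation before relying on it:

```python
def elasticache_request(group_id: str, node_type: str, az: str) -> dict:
    """Keyword arguments for a single-node, cluster-mode-disabled Redis 5.0.6 group."""
    return {
        "ReplicationGroupId": group_id,
        "ReplicationGroupDescription": "single-node benchmark target",
        "Engine": "redis",
        "EngineVersion": "5.0.6",
        "CacheNodeType": node_type,         # e.g. "cache.r5.large"
        "NumCacheClusters": 1,              # primary only, i.e. 0 replicas
        "PreferredCacheClusterAZs": [az],   # same AZ as the benchmark machine
        "AutomaticFailoverEnabled": False,  # failover needs a replica, so it stays off
    }
```

You would pass the result as `boto3.client("elasticache").create_replication_group(**params)`; once the group is available, `describe_replication_groups` reports the primary endpoint under `NodeGroups[0]["PrimaryEndpoint"]`.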

Memtier

Ensure you are running on at least an m5.8xlarge. Install memtier by following its installation instructions. You can start by loading the dataset with:

Then run a normal test with:

You can experiment with other parameters and options. See the --help option for details.

YCSB

Please refer to the GitHub repo for installation and to the wiki for more details on using YCSB, workloads, loading, running, etc. Below are the basic commands we used to produce the benchmark results. See the wiki for more info.

We first load the data, then run the tests. The default settings are small, so we specify a recordcount of 10 million and an operationcount of 10 million for our tests. See the YCSB documentation for the full list of workload properties.

With a low number of clients, benchmarks can be sensitive to network latency. For fairly consistent results and maximum throughput, we use 350 client threads. Your results will be written to the file you specify.

All YCSB tests in this blog were run against an r5.4xlarge instance (the machine under test), with the m5.8xlarge as the benchmark machine.
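YCSB writes its summary as comma-separated lines such as `[OVERALL], Throughput(ops/sec), <value>` and `[READ], AverageLatency(us), <value>`. A small Python sketch for pulling those figures out of the output file so runs can be compared side by side; the function name is hypothetical:

```python
from __future__ import annotations

import csv
from io import StringIO


def parse_ycsb(text: str) -> dict[tuple[str, str], float]:
    """Map (section, metric) -> value for every '[SECTION], metric, value' line."""
    results: dict[tuple[str, str], float] = {}
    for row in csv.reader(StringIO(text), skipinitialspace=True):
        # Skip status lines and anything that is not a three-field summary row.
        if len(row) != 3 or not row[0].startswith("["):
            continue
        section, metric, value = row
        try:
            results[(section.strip("[]"), metric)] = float(value)
        except ValueError:
            continue
    return results
```

For example, `parse_ycsb(open("run-workloada.txt").read())[("OVERALL", "Throughput(ops/sec)")]` would return the overall throughput, where the file name stands in for whatever output file you specified.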