Running KeyDB on Arm-based Amazon EC2 A1 Instances for the Best Price/Performance

We’ve talked to many of our users who were looking to optimize their costs on AWS. While most had experimented with the many available x86-based instance types, we were surprised that few had tried the Arm-based Amazon EC2 A1 instances. These Arm-based instances come with unique performance advantages for multi-threaded cache server workloads. To understand why cache databases, and KeyDB specifically, are uniquely suited to Arm, we first have to understand a little about the hardware.

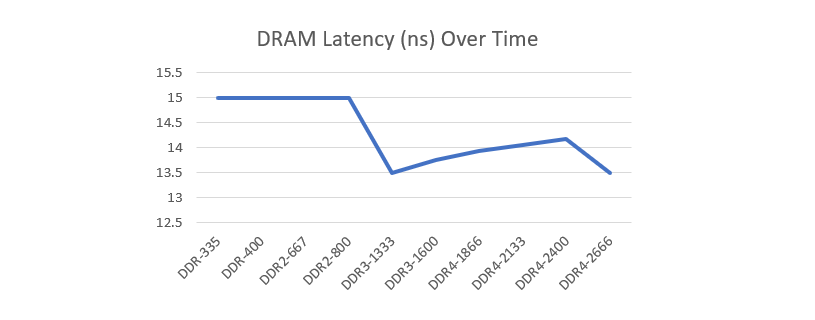

While CPU performance has been growing exponentially since the early 2000s, memory latency has been stagnant. A DDR1 module purchased in 1998 has 15ns of latency, while a modern DDR4 module purchased today has at best 13.5ns, an improvement of only 10% in 20 years [1]. The implications for software developers are clear: keep as much data in the cache as possible and ensure the CPU is able to prefetch aggressively.

This poses a problem for cache databases like KeyDB. Our multi-gigabyte datasets are far too large to fit in the CPU cache, and accesses depend on external requests, which cannot be easily prefetched. As a result, our modern ultra-fast processors spend much of their time idle, waiting on DRAM. In this environment, single-threaded performance is limited by DRAM latency, and the total number of cores becomes the dominant factor in overall performance.
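To make that reasoning concrete, here is a toy throughput model. It is only a sketch: the function `ops_per_sec`, its parameters, and every number passed to it are hypothetical illustrations, not measurements of KeyDB or of any EC2 instance. It assumes each request costs a fixed amount of compute time plus a fixed number of cache misses that cannot be overlapped, and that throughput scales linearly with core count.

```python
def ops_per_sec(cores, compute_ns, misses_per_op, miss_latency_ns):
    """Toy model: throughput when every request stalls on memory.

    All parameters are hypothetical. Assumes cache misses are serialized
    (no prefetching or overlap) and that cores scale perfectly.
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    # Time for one request on one core: compute work plus stalled time.
    time_per_op_ns = compute_ns + misses_per_op * miss_latency_ns
    # Each core completes 1e9 / time_per_op_ns requests per second.
    return cores * 1e9 / time_per_op_ns


# Made-up inputs chosen only to show the shape of the trade-off.
baseline = ops_per_sec(cores=2, compute_ns=100, misses_per_op=4, miss_latency_ns=100)
faster_cores = ops_per_sec(cores=2, compute_ns=50, misses_per_op=4, miss_latency_ns=100)
more_cores = ops_per_sec(cores=4, compute_ns=100, misses_per_op=4, miss_latency_ns=100)

print(f"2x faster compute: {faster_cores / baseline:.2f}x throughput")
print(f"2x the cores:      {more_cores / baseline:.2f}x throughput")
```

With these made-up inputs, halving the compute time per request raises throughput by only about 11%, because most of each request is spent waiting on memory, while doubling the core count doubles it. Real systems will not scale this cleanly, but the direction of the effect is the point.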

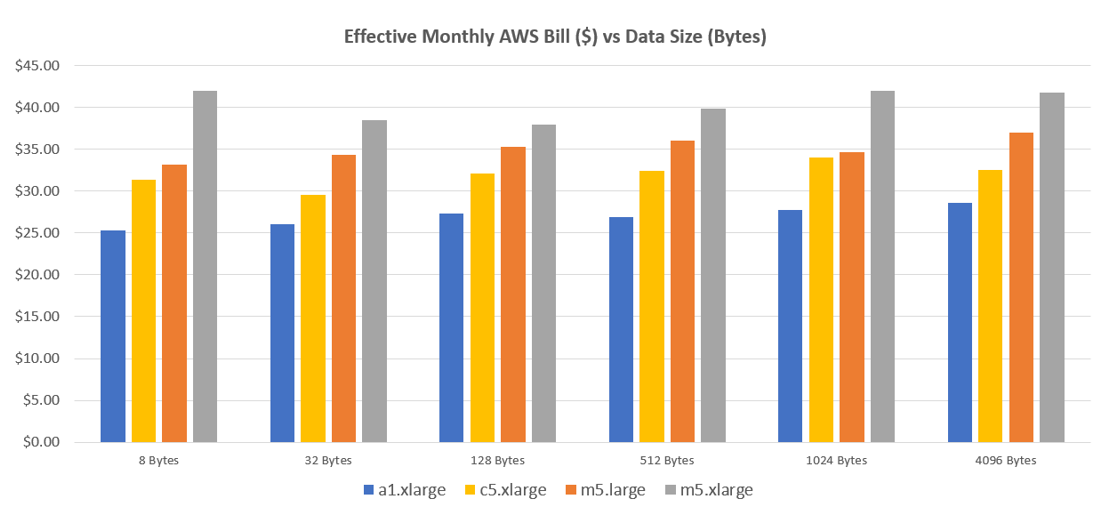

This is the type of workload where Arm, and Amazon EC2 A1 instances in particular, shine. With costs per core up to 45% lower, you ensure you are paying for performance you can actually use. As the chart below shows, empirical testing bears this out, with up to 30% cost savings shown in real-world testing:

The chart above shows the effective running cost per month, normalized to achieve 250,000 ops/sec, on various AWS EC2 instance types versus the size of data fetched. We can see that the Arm-based EC2 A1 instances maintain a cost advantage throughout. The monthly cost uses reserved instance pricing and is calculated as: [(250,000 ops/sec) / (benchmarked ops/sec of the instance)] x (EC2 cost/hr) x (24 hrs) x (30 days). This normalization lets us compare cost per unit of throughput. Regarding performance results, the a1.xlarge (4 cores, 8GB) gets about 30% more ops/sec than the m5.large (2 cores, 8GB), and about 30% less than the m5.xlarge (4 cores, 16GB) and c5.xlarge (4 cores, 8GB). However, when looking at the volume served per dollar spent, the A1 instances start to show noticeable benefits.
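The normalization formula above can be sketched in a few lines of Python. The function name `normalized_monthly_cost` and the inputs in the example call are placeholders for illustration only; they are not the benchmark results or AWS prices behind the chart, so substitute your own measured ops/sec and current reserved instance pricing.

```python
TARGET_OPS_PER_SEC = 250_000  # throughput target used in the formula above
HOURS_PER_MONTH = 24 * 30     # 24 hrs x 30 days, as in the formula above


def normalized_monthly_cost(benchmarked_ops_per_sec, cost_per_hour,
                            target_ops_per_sec=TARGET_OPS_PER_SEC):
    """Monthly cost to serve the target throughput on one instance type.

    benchmarked_ops_per_sec: measured throughput of a single instance.
    cost_per_hour: hourly price of that instance (reserved pricing).
    """
    if benchmarked_ops_per_sec <= 0:
        raise ValueError("benchmarked_ops_per_sec must be positive")
    # Fractional number of instances needed to reach the target.
    instances_needed = target_ops_per_sec / benchmarked_ops_per_sec
    return instances_needed * cost_per_hour * HOURS_PER_MONTH


# Placeholder inputs, NOT real benchmark numbers or AWS prices.
example = normalized_monthly_cost(benchmarked_ops_per_sec=125_000,
                                  cost_per_hour=0.10)
print(f"${example:.2f} per month")
```

Because the instance count is fractional, the result is a cost-per-throughput figure for comparing instance types rather than a bill for a whole number of machines.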

In this benchmark it becomes clear that while x86 cores may individually be faster, for cache-based workloads it is the total number of cores that matters most, a situation where Arm becomes far more economical.

Because of this inherent cost advantage, we’ve spent a lot of time optimizing KeyDB for Arm, and our multithreaded architecture allows KeyDB to get the most from this type of hardware. If you are looking to reduce costs in your caching layer, KeyDB paired with Amazon EC2 A1 instances is a strong combination.

Here are some links to learn more about KeyDB or Amazon EC2 A1 Instances.

To check out the KeyDB open source project on GitHub, click here

1. https://www.crucial.com/usa/en/memory-performance-speed-latency